Ah, bioinformatics, the intersection of biology, computing, and all things sequencing. For some, it’s a magic key, allowing us to unravel the secrets of life; for others a complete nightmare of software issues, crashes, and endless bugs. Fortunately, there’s never been an easier time to learn with the abundance of resources and tools. Not so fortunately, there’s often too much out there, causing further headaches and discouragement. In this post, we’ll approach bioinformatics in a digestible, beginner-friendly manner, focusing on the why and the how. We’ll use what I believe to be the best way to learn: reproducing results from established methods. So whether you’re a bench scientist who has installed R, but never opened it, or someone with nightmares of failed package installations, or someone who has just been putting off learning, I hope this post will encourage you to take a plunge into the wonderful world of bioinformatics!

Today, the ability to decipher and analyze biological data is a skill of paramount importance in research, no matter your role. Here, we will be reproducing the Seurat [5.0] Guided Clustering Tutorial from the Satija Lab using our ComputeBench, a cloud-based platform for computational analysis. Up-to-date, expertly curated software packages for the life sciences are readily available for users to dive into including ready-made blueprints for RNA-seq analysis, metagenomics, and more.

The tutorial revolves around one of the most integral components of bioinformatics: analyzing single-cell RNA (scRNA) sequence reads. Specifically, we will aim to identify distinct cell populations within different samples, identify rare cell types, and understand cellular heterogeneity within tissues or organisms.

What is Single-cell RNA sequencing? What about Seurat?

Single-cell RNA sequencing (scRNA-seq) allows researchers to explore the gene expression profiles of individual cells, providing a detailed view of cellular heterogeneity. This technology is essential throughout biology as it helps identify rare cell populations, observe how cells respond to different conditions, and understand the processes of cell differentiation. The data generated from scRNA-seq is massive, often containing millions of reads. As you can imagine, this is not very useful to us in its raw form. However, with further processing and analysis, scientists (and soon you!) can quantify the expression levels of genes across thousands of cells to reveal cell types, states, and responses within a complex tissue or organism.

Seurat is an R package that provides a comprehensive framework for preprocessing, analyzing, and interpreting single cell genomics data. It offers a full suite of tools for the entire scRNA-seq analysis pipeline from data pre-processing to interpretation and visualization. Its flexible and modular design allows researchers to customize their workflows to suit their specific experimental needs and hypotheses.

Since Seurat was released in 2015, it has become a hallmark bioinformatic package used by bioinformaticians across multiple fields. One example of Seurat’s application is “Ageing hallmarks exhibit organ-specific temporal signatures“ by Schaum et al. (2020), which investigated the aging process across multiple organs of house mice using sc-RNA seq data and revealed key insights into the molecular mechanisms underlying aging. Their results suggest that the aging process is not uniform across the body, but is instead finely tuned to the specific needs and functions of each organ.

ComputeBench Setup

Our ComputeBench is a cloud-based environment for data analysis in computational biology and bioinformatics. It allows scientists to work and code directly in a browser with pre-installed packages such as Seurat— eliminating the hassle with software installation, software configuration, and running computational resources in the cloud.

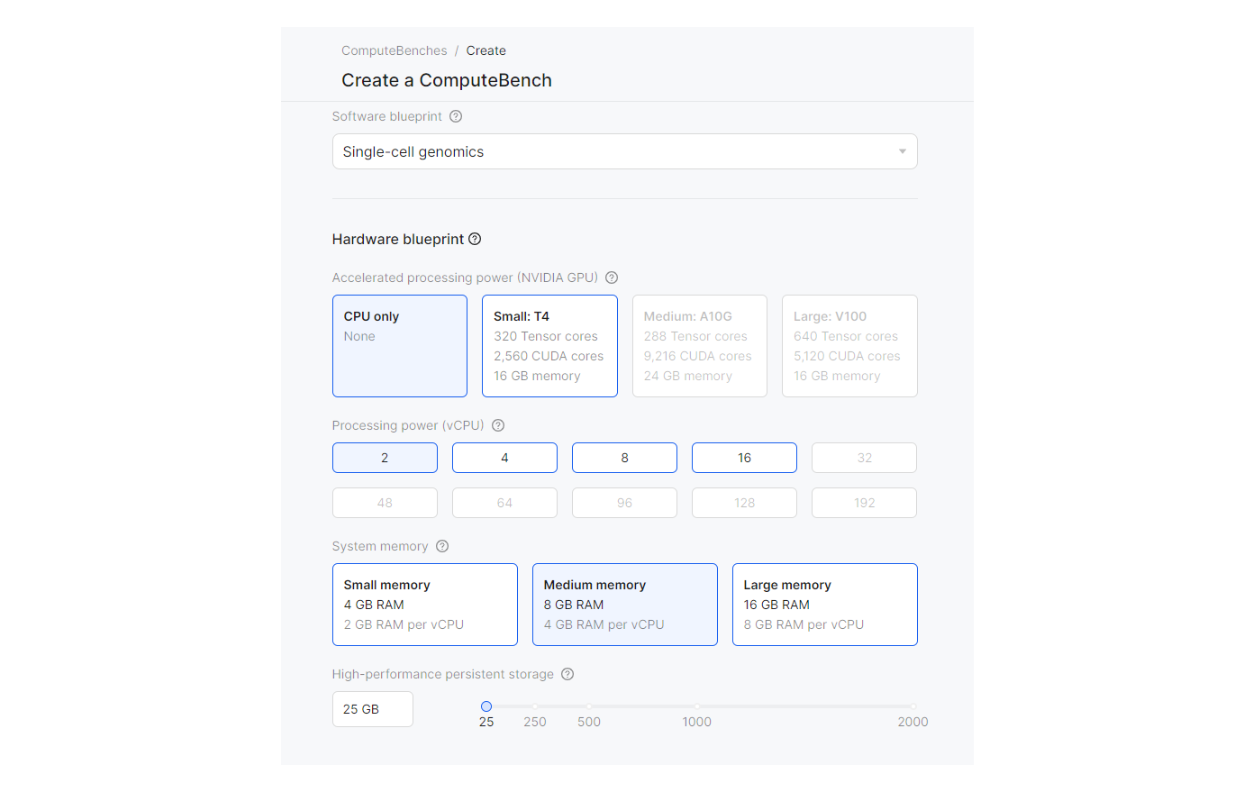

After logging into Deep Origin OS, create a ComputeBench by clicking the “+ New bench” button and set up the bench with the Single-cell genomics software blueprint and the default hardware configurations. (If you do not yet have a Deep Origin account set up, you can request access here). For more information, see our documentation.

The guide we are following is based on the Seurat package in R—luckily for us, there’s already a blueprint ‘Single-cell genomics’ ready for single cell analysis and this tutorial. We’ll be using the libraries Seurat, dplyr, Patchwork, and Tidyverse which are available in this blueprint. Outside of pre-made blueprints, users can also easily customize ComputeBenches according to their specific needs such as installing additional R packages.

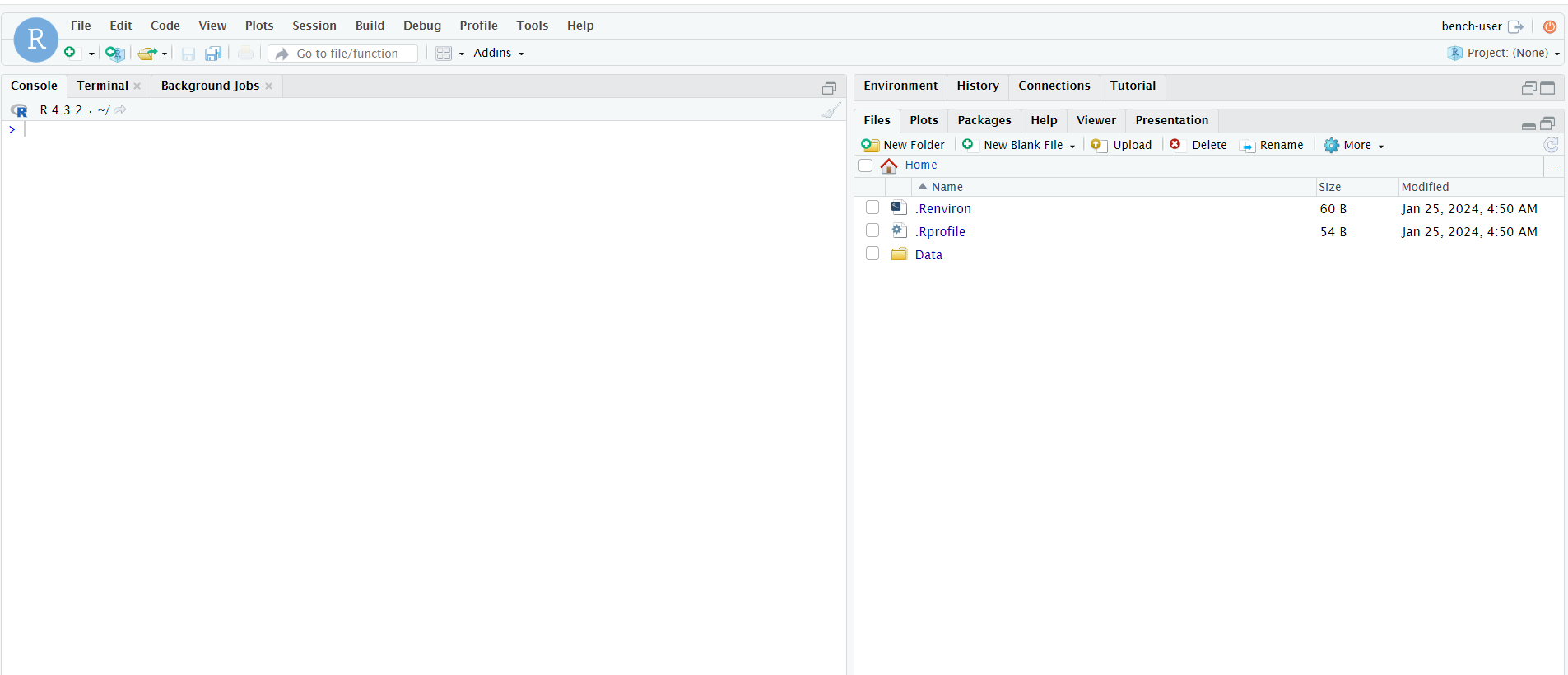

After creating a ComputeBench, the next step is to determine which IDE (which application) we would like to code in. RStudio, JupyterLab, and VS Code are all viable for R. For R, we recommend using RStudio. After connecting to your ComputeBench via the “Connect” dropdown menu, a new browser tab will pop up with RStudio.

.png)

Let's get started by loading the R packages and downloading the files we will use. For Seurat, these PBMC files are typically generated by the 10X CellRanger tool, which aligns and preprocesses data after single-cell sequencing, so we can do the follow-on analysis. To download the scRNA-seq data files, follow the R code below in your RStudio console. RStudio will then execute this code and automatically download the necessary data. The same process applies for all the plots hereafter—run the code in the console and the plots will be generated in the ‘plots’ window in the terminal.

# Load the various libraries

library (Seurat)

library (dplyr)

library(patchwork)

library(tidyverse)

# Create a 'Data' folder in the home directory to store data files

dir.create(file.path("~", "Data"))

# Destination file path where you want to save the downloaded file

destination <- "pbmc3k_filtered_gene_bc_matrices.tar.gz"

# Download the dataset from the 10x genomics website

download.file("https://cf.10xgenomics.com/samples/cell/pbmc3k/pbmc3k_filtered_gene_bc_matrices.tar.gz", destfile = destination, method = "auto")

# Destination directory where you want to extract the files

extract_dir <- "Data"

# Extract the contents of the tar.gz file

untar("pbmc3k_filtered_gene_bc_matrices.tar.gz", exdir = extract_dir)You can examine the downloaded files by examining the files pane in the right side section of your terminal.

.png)

Understanding the data

The dataset that we download above captures 2,700 single cells from Peripheral Blood Mononuclear Cells (PBMC), which include lymphocytes, monocytes, and dendritic cells [1] measured from 10x Genomics. In scRNA-seq, the the sequencing process typically generates three crucial files:

- ‘matrix.mtx’: represents the number of reads (counts) for a specific gene in a specific cell. Each row corresponds to a gene (or transcript) in the dataset and each column corresponds to a single cell (or barcode) from the dataset.

- ‘genes.tsv’: each row represents a gene through a unique ID and its gene symbol. This file helps to map the rows of the ‘matrix.mtx ’to specific genes.

- ‘barcodes.tsv’: contains the unique barcodes that identify each individual cell. Each row represents a cell with a unique barcode sequence that corresponds to the columns of ‘matrix.mtx’ to distinguish between different cells.

Seurat uses all three files to generate a unique molecular identifier (UMI) count matrix. This matrix is then loaded into a variable and initialized as a Seurat object (‘pbmc’) which stores our data as well as any processing and analysis performed on the dataset.

Run the following code to confirm that the scRNA-seq data files have been downloaded as expected. Afterwards, create a variable to store the PBMC data and initialize a Seurat object.

# Check to confirm that your files have been successfully downloaded in the correct directory and folder

list.files("/home/bench-user/Data/filtered_gene_bc_matrices/hg19")

# Store the PBMC data into a variable

pbmc.data <- Read10X(data.dir = "/home/bench-user/Data/filtered_gene_bc_matrices/hg19/")

# Initialize a Seurat object with the PBMC data

pbmc <- CreateSeuratObject(counts = pbmc.data, project = "pbmc3k", min.cells = 3, min.features = 200)

# You may get a warning indicating that the underscores, are being replaced with dashes - don't worry it won't affect our workflowData pre-processing

Once we have loaded the data, we will need to conduct standard preprocessing for later downstream analysis. These steps include quality control (QC), normalization, feature selection, and data scaling. Don’t worry if you don’t fully understand what each of these steps will do yet - they will make more sense as we go through the work.

Note: The term “feature” is commonly used through this tutorial, as well as in the field of bioinformatics. Simply put, a feature is an individual measurable piece of data, or property, within a dataset. This is a broadly used term and can encompass a wide variety of types of data depending on the context. In bioinformatics, it is often a biological characteristic such as a mutation, level of protein abundance, or methylation site. In our case, each feature refers to a gene.

Now that we have a picture of the preprocessing steps, we will perform QC by running the following code. This will produce visualizations of our QC metrics and give us a better understanding of their relationships. An explanation will be provided in the next subsection.

# Creating a column in our data matrix to store QC metrics for later use. For our QC, we are trying to find the percentage of reads that map to the mitochondrial genome. Poor or dying cells commonly exhibit abnormal levels of mitochondria.

pbmc[["percent.mt"]] <- PercentageFeatureSet(pbmc, pattern = "^MT-")

# Often useful to visualize the QC metrics and compare several relationships to see trends or spikes

VlnPlot(pbmc, features = c("nFeature_RNA", "nCount_RNA", "percent.mt"), ncol = 3)

# Create a scatter plot to visualize the relationship between variables

plot1 <- FeatureScatter(pbmc, feature1 = "nCount_RNA", feature2 = "percent.mt")

plot2 <- FeatureScatter(pbmc, feature1 = "nCount_RNA", feature2 = "nFeature_RNA")

# Combine the two plots onto one

plot1 + plot2.png)

Understanding QC

In Figure 5, we plot the QC metrics using violin plots, commonly used to observe the density of a population. nFeature_RNA refers to the number of genes detected in each cell and nCount_RNA refers to the total number of molecules detected in each cell. Low gene counts signify dying cells, while high counts typically indicate that the cell is a doublet or multiplet— a case where two or more cells are mistaken for a single cell during flow cytometry in single cell sequencing. In addition, we visualize the %mitochondrial reads between the genes found in the cell and the mitochondrial genome; high mt percentage is indicative of contamination or poor-quality cells.

In Figure 6, we plot the relationship between the various features. The number at the top of the plot constitutes the Pearson correlation value which is used in statistical analysis to represent the strength between a linear relationship (values range from 1 to -1). A value of -0.13 indicates a weakly negative correlation meaning that as total RNA increases, the percentage of mitochondrial RNA tends to decrease. A value of 0.95 indicates a strongly positive correlation meaning that as the total number of molecules in a cell increases, the number of detected genes also increases for most cells.

From these QC metrics, we filter out cells that have less than 200 feature counts as well as those with greater than 2500. We also filter out cells that have greater than 5% mitochondrial read counts.

pbmc <- subset(pbmc, subset = nFeature_RNA > 200 & nFeature_RNA < 2500 & percent.mt < 5)Once we’re done with QC, we can move on to normalization, as well as feature selection and data scaling.

# Once we removed the undesired cells from the data (i.e. high levels of mitochondria contamination), we can normalize the data.

pbmc <- NormalizeData(pbmc, normalization.method = "LogNormalize", scale.factor = 10000)

# Alternatively we can run the following command which achieves the same as above:

pbmc <- NormalizeData(pbmc)

# We now want to find features that exhibit high cell-to-cell variation (highly expressed in some cells, low in others).

pbmc <- FindVariableFeatures(pbmc, selection.method = "vst", nfeatures = 2000)

# Find top 10 variable features

top10 <- head(VariableFeatures(pbmc), 10)

#Visualize on plot

plot1 <- VariableFeaturePlot(pbmc)

plot2 <- LabelPoints(plot = plot1, points = top10, repel = TRUE)

plot1 + plot2.png)

Understanding Normalization, Feature Selection, and Scaling Data

We first normalize the data by dividing the counts for each gene by the total counts in the cell, followed by a multiplication using a scale factor of 10,000 and then natural log-transforming the results.

Feature selection involves identifying features that demonstrate high variability (highly expressed in some cells, low in others). Identifying these features help in focusing on highly significant genes. 2,000 features are plotted (by default in Seurat) and the top 10 gene variables are then identified. Figure 7 shows the features’ expression plotted against their variance; genes with the highest variance are labeled.

Data Scaling is performed by shifting the expression of each gene, so that the mean expression across cells is 0, and scaling the expression of each gene, so that the variance across cells is 1.

# Scale the data so that no single feature dominates the dataset

all.genes <- rownames(pbmc)

pbmc <- ScaleData(pbmc, features = all.genes)Dimensionality Reduction

We can now move on to the nitty-gritty of the analysis, starting with dimension reduction. At its core, it is a technique to simplify complex data by representing it in a lower-dimensional space, while still preserving the essential elements of the data. We first use Principal Component Analysis (PCA) which identifies the main ways (called principal components) in which the data varies the most. It then uses the principal components to reorganize the data, making it easier to see patterns and reduce the number of variables we need to consider. In this analysis, we are only focusing on the variable features that were selected and scaled in the previous section.

# Perform PCA and visualize results

pbmc <- RunPCA(pbmc, features = VariableFeatures(object = pbmc))

# Using five principal components, we can examine which genes are the most corrlated (or anti) across their single-cell dataset

print(pbmc[["pca"]], dims = 1:5, nfeatures = 5)

# Visualize the first two PCs

VizDimLoadings(pbmc, dims = 1:2, reduction = "pca").png)

# Visualize the results of the dimensional pLot

DimPlot(pbmc, reduction = "pca") + NoLegend().png)

# Create heat map of first PC

DimHeatmap(pbmc, dims = 1, cells = 500, balanced = TRUE).png)

# Create heat map of first 15 PCs

DimHeatmap(pbmc, dims = 1:15, cells = 500, balanced = TRUE).png)

# Analyze the PCA scores through a ranking of principle components based on the percentage of variance

ElbowPlot(pbmc).png)

Understanding Dimensionality Reduction

PCA works by determining loadings (‘weight’) correlating to the degree of their contribution to each principal component (PC). PCs are simply new variables that are created as linear combinations of the initial variables or features. Think of it as new labels that we can use to group or separate pieces of data together. When performing PCA, it is up to the user to determine how many principal components are required to retain the information in their data and provide an appropriate ‘reduction’. Increasing the number of PCs, may not be computationally efficient, nor be effective in adding extra value to the reduction. A common practice is to pick enough components to cover 95% of the total data variance.

Figure 8 illustrates the first two PC loading values and the respective genes. A positive loading indicates a positive correlation between that gene and the PC while a negative loading indicates an anti-correlation. Figure 9 represents the data points based on the first two PCs. One can observe groups of points that are closer together. These indicate a similarity between how they correlate to the two PCs. In other words, these genes probably have similar function or expression in regards to the entire cell. The axes are unitless and do not bear any physical significance as is the case with dimensional plots. Figure 10 and 11 shows another way PCA results can be visualized: heat maps. This is a good way to further examine the ‘heterogeneity’ of the data across different PCs, and determine what and how many PCs should be used to extract the relevant information out of the dataset.

To assist in determining how many PCs are required (i.e. determining the ‘dimensionality’ of the data), we can use an elbow plot to analyze the variance that is captured by each PC such as in Figure 12. After the first ten PCs, there is a stagnation, which suggests that further PCs do not capture additional valuable information.

Determining the dimensionality of a dataset can be challenging and require multiple iterations before landing on the desired output. It is encouraged to always try different amounts of PCs and observe how that changes the noise and final results when analyzing your data. Visualizing the effects of additional PCs through heat maps and elbow plots as described above can also be beneficial in your analysis. To help readers get a better understanding of PCA, I have included a flowchart below (Figure 13). Please note that this is a gross simplification; if you are still having difficulty grasping PCA there are an abundance of amazing resources online to learn from.

As a final note, PCA is based on linear transformations, which makes it computationally efficient and relatively straightforward to implement. It is also well-suited for datasets where linear relationships between variables are present. However, this may not always be the case as the data can exhibit nonlinear relationships or complex interactions. In such cases, t-SNE or UMAP are non-linear techniques which may be more useful at the cost of being more complex and sensitive to parameters. We will see these techniques in play shortly.

%2520(1).jpeg)

Continuing further

The rest of the tutorial involves using non-linear dimensionality reduction techniques such as UMAP, clustering cells, and finally identifying cell types through markers. We will not cover these steps in this post, but they are a good way to deepen your understanding of scRNA-seq analysis and can be explored independently by following the Satija lab tutorial.

Conclusion

The Satija tutorial provides an excellent introduction to the world of bioinformatics and scRNA-seq analysis using Seurat and R.

That being said, there are still many techniques in the grand bioinformatic toolbox yet to be explored in this post. I truly believe there is no better way of learning than doing and so I encourage you to explore the myriads of vetted datasets out there such as cellxgene, Best of all, reproducing quality data allows you to learn in a low-stakes environment. What’s the worst that can happen— you can always try again.

With the help of our ComputeBench, researchers can work directly in their browser without all the headaches of managing scientific software and cloud hardware. Benches are customizable and persistent, so you can always pick up right where you left at any point. If you have specific research goals, our team of dedicated experts are also available to help develop tailored benches and blueprints. Sounds appealing? Embark on your bioinformatics journey today using our platform with $500 worth of free credits for your first month.

References

[1] Pourahmad J, Salimi A., 2015, Isolated human peripheral blood mononuclear cell (PBMC), a cost effective tool for predicting immunosuppressive effects of drugs and xenobiotics. Iran J Pharm Res. 2015;14(4):e125405. https://doi.org/10.22037/ijpr.2015.1790.

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)